Imagine that you're storing data as a sequence of values. In Python, you may consider using built-in data types like lists, sets, tuples, or dictionaries. Then, you can loop over these things in a for-loop and do some stuff.

Whatever you use those "things", they mean something Iterable. And the process of looping over the items is called Iterating.

names = ["Python", "Java", "Typescript", "Rust"] # Iterable object

for name in names:

pass

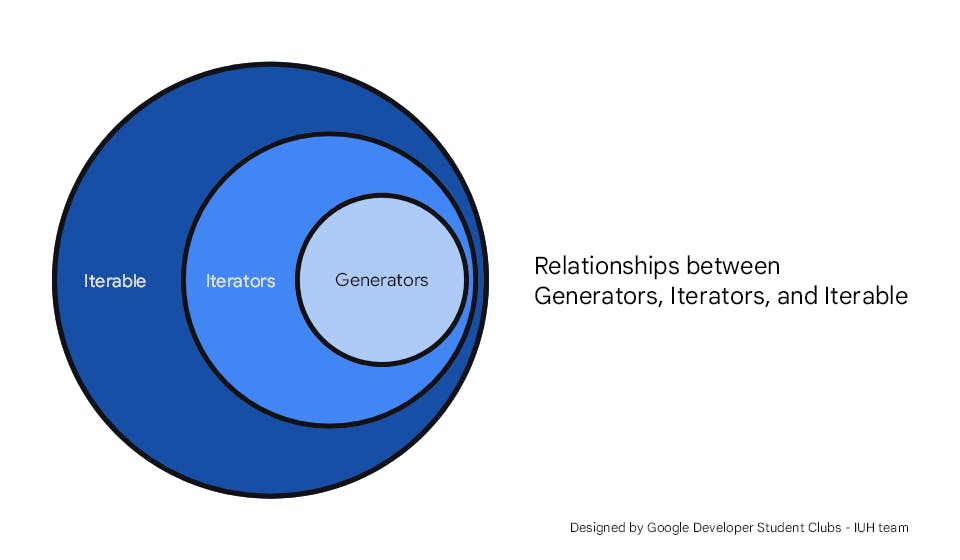

Before we move on to discuss Generators in Python and how it helps efficiently loop in the large Iterable things, we can start with the following diagram such that we can have a bigger picture by understanding related concepts. The figure shows you the relationships between generators, iterators, and iterable.

Iterable and Iterator are often confused for one another, however, they are two distinct concepts.

Iterable

An iterable object (or simply an iterable) provides a mechanism to allow other data consumers to access its elements sequentially, which is iterating and retrieving elements in a way that follows a particular order.

An iterable object implements the __iter__() method and returns an iterator. However, if the __iter__() method is not defined, Python will use __getitem__() instead.

All types belonging to the class of collections are iterable. Some iterable objects are mutable and some are immutable.

Beyond lists, sets, tuples, and dictionaries, some common sequences are iterable such as strings and bytes. Example:

for character in "Python":

print (character)

Iterator

An Iterator is an object which uses the __iter__() and __next__() methods, this is called the Iterator protocol. It is an iterable object with a state, or in other words, it remembers what step is at during iteration.

The best part of an iterator is not to generate the whole collection all at once and consume memory for it, rather the element is generated only when it is being demanded from an iterator.

An iterator serves elements sequentially. That means it can only move forward, and cannot go back or reset itself. If there're no further elements, the exception will be thrown whatever its name depending on the programming language you use.

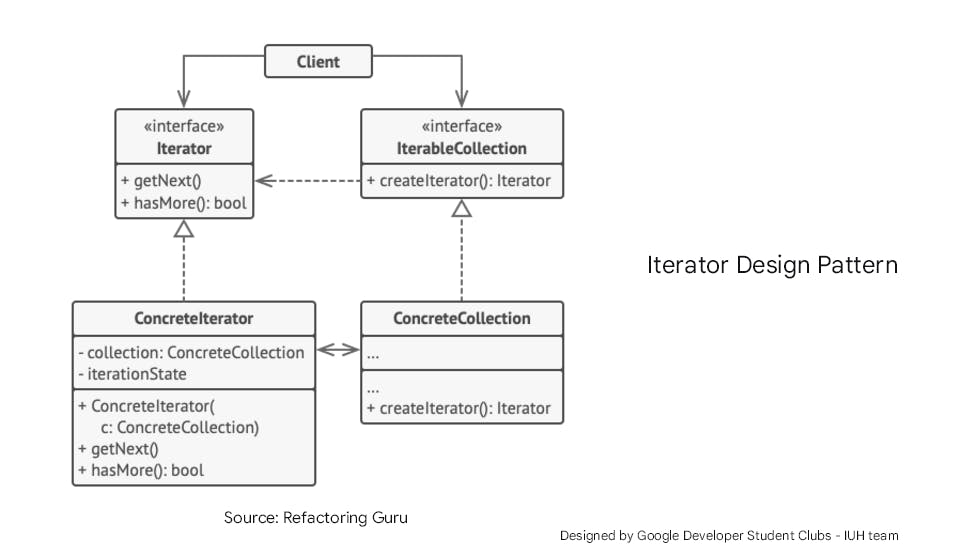

Iterator is also one of the behavioral design patterns and has been presented in the built-in collection classes/interfaces of all popular programming languages on Earth, including Python. It lets you traverse elements of a collection without exposing its underlying representation.

If you used to work with Java Database Connectivity (JDBC) in Java or any kind of APIs you used to perform read/write data from the database programmatically, you maybe recognize this pattern.

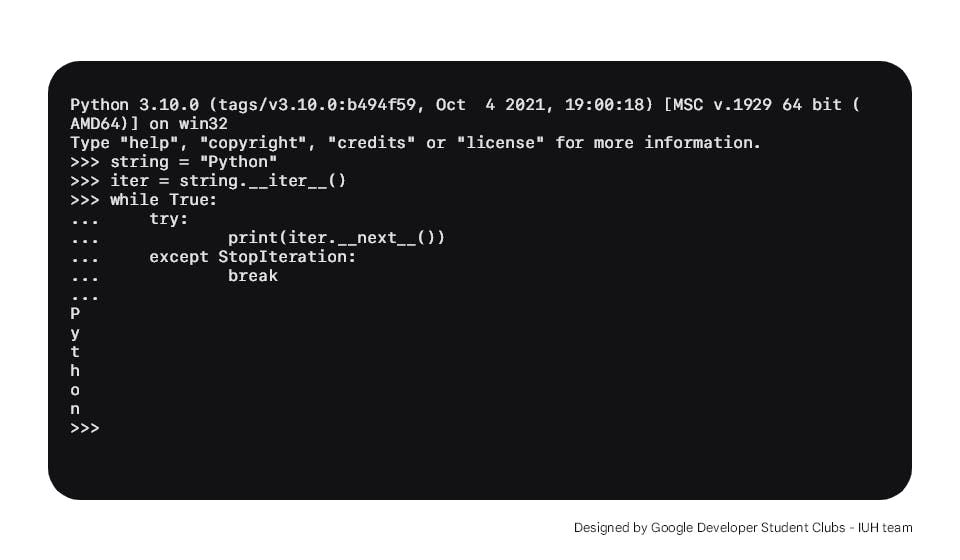

Back to Python, I'll show you in the snippet below:

# create a string

string = "Python"

# get an iterator of string object

iter = string.__iter__()

while True:

try:

print(iter.__next__())

except StopIteration:

break

As we can see in the snippet above, we create the collection as a string and get an iterator from the instance using the __iter__() dunder method. Whenever __next__() dunder method of the iterator is invoked, it goes to the iterable and gets one more element. If there are no additional elements, the StopIteration exception will be thrown to stop the iteration.

After you run this code, the result is exactly when you loop all the characters of the string using the for-loop.

To deeply understand how Iterator works, let's create a class that auto-generates the first n Fibonacci numbers.

Since we know __iter__() and __next__() methods exist in Iterable objects, let's create a Fibonacci class, and initialize those methods:

class Fibonacci:

# Default constructor

def __init__(self):

pass

def __iter__(self):

pass

def __next__(self):

pass

Because we need to know how many first Fibonacci numbers we'd create, let's add a parameter in the constructor to define the number of loops we're going to iterate.

On the other hand, we also initialize the first and second Fibonacci numbers. Let's rewrite __init__() method:

def __init__(self, n):

self.count = n # number of loops

self.a = 0

self.b = 1

In this snippet above, self.count represents our loop number that remains. We re-compute a and b by the formulation declaration and decrease the count value once each loop.

When it is exhausted (as self.count is equal to 0), we raise StopIteration exception, let's rewrite __next__() method:

def __next__(self):

if self.count == 0:

raise StopIteration

self.a, self.b = self.b, self.a + self.b

self.count -= 1

return self.a

Since Fibonacci is an Iterable object, which __iter__() method is represented, we must return self value. If not, TypeError will be raised. Let's rewrite __iter__() method:

def __iter__(self):

return self

As we know so far, we can use for-loop to print all numbers that we just generated:

for number in Fibonacci(6):

print(number)

Here are the final code lets you generate the first n Fibonacci numbers:

class Fibonacci:

def __init__(self, n):

self.count = n # number of loops

self.a = 0

self.b = 1

def __iter__(self):

return self

def __next__(self):

if self.count == 0:

raise StopIteration

self.a, self.b = self.b, self.a + self.b

self.count -= 1

return self.a

for number in Fibonacci(6):

print(number)

Congratulations! We made it!

Unfortunately, there is a lot of boilerplate, and the logic has to be expressed in a somewhat convoluted way. This would be tough if writing all that just to get an iterator.

Generator - The bigger picture

The generator function uses yield statement to give output instead of return statement.

While return actually returns a value, yield always returns from a function without destroying the states of its local variable and when the function is called, the execution starts from the last yield statement. Any function that contains a yield keyword is termed a Generator. Hence, yield is what makes a generator.

Let’s see what a generator looks like with the following snippet:

def get_string_generator():

yield 'P'

yield 'y'

yield 't'

yield 'h'

yield 'o'

yield 'n'

string_generator = get_string_generator()

print(string_generator.__next__()) # P

print(string_generator.__next__()) # y

print(string_generator.__next__()) # t

print(string_generator.__next__()) # h

print(string_generator.__next__()) # o

print(string_generator.__next__()) # n

print(string_generator.__next__()) # StopIteration exeption

As you can see, they work the same as Iterator. But a much simpler fashion. We don't need to create a class to get an Iterator, we just create a normal function, and use Generator instead.

Back to the Fibonacci example earlier, here is how the function was rewritten by using Generator:

def first_fibonacci(n):

a, b = 0, 1

for i in range(n):

a, b = b, a + b

yield a

for number in first_fibonacci(6):

print(number)

And that works perfectly.

How Generator makes a difference

Let's write a function that generates the first Fibonacci number, once we compute the next Fibonacci number, we append it to a list and return that list when the loop is finished:

def first_fibonacci(n):

a, b = 0, 1

fibonacci = []

for i in range(n):

a, b = b, a + b

fibonacci.append(a)

return fibonacci

print(first_fibonacci(6))

Assume that the n number is really big, each number in the list of Fibonacci numbers takes up a lot of space, say 10 megabytes each. The more the number we generate, the more storage we need to store those numbers in memory.

This may lead our computer out of memory, which is not acceptable.

In Python, we can calculate how much memory space does variable takes by importing the sys module and using the getsizeof() method.

Here is the code you can copy and try out:

import sys

def first_fibonacci(n):

a, b = 0, 1

fibonacci = []

for i in range(n):

a, b = b, a + b

fibonacci.append(a)

return fibonacci

def first_fibonacci_generator(n):

a, b = 0, 1

for i in range(n):

a, b = b, a + b

yield a

print(sys.getsizeof(first_fibonacci(100000)))

print(sys.getsizeof(first_fibonacci_generator(100000)))

On a scale of 100000 numbers, that's a big gap while Fibonacci wrote in Generator only and always takes 112 bytes. On the other side, the normal function takes 824456 bytes.

The difference in memory usage is very significant. A generator only needs to know its current state and doesn’t need to load all its elements upfront, unlike lists and other iterable objects.

List comprehension and Generator expression

List comprehension allows us to create a list using for loop with lesser code. What normally takes 3-4 lines of code or more, can be compressed into just a single line.

Example:

list = [i for i in range(1, 100001)]

print(list)

# 1 2 3 4 5 6 7 8 9 10 ...

Generator expressions are somewhat similar to list comprehensions, but the former doesn’t construct the list.

Instead of creating a list and keeping the whole sequence in the memory, the generator generates the next element in demand. Generator expression allows us to create a generator without the yield keyword.

Thus we can say that the generator expressions are memory more efficient than the list does.

Parenthesis are used in place of square brackets.

Example:

list = (i for i in range(1, 100001))

print(list) # <generator object <genexpr> at 0x7fa4c51f23c0>

Generator drawbacks

Generator is powerful and more efficient than other ways we store data. But it's not ideal, and can only be used in some cases.

Let's say we have a generator and want to print this out to the console, we can use list comprehension or for-loop to retrieve its data. Eventually, the way we used Generator, in this case, is not efficient at all.

list = (i for i in range(1, 100001))

print([number for number in list]) # 1 2 3 4 5 6 7 8 9 10 ...

If you recall, the most important feature of a generator as an iterator is that it renders a value whenever it’s requested. Related to this feature is the computer concept called lazy evaluation or laziness, which means that specific operations are not executed until the need arises. In the case of generators, they don’t bother generating all possible elements until someone calls the generators to do so.

Recap

Generators are a subclass of iterators, which are all iterables

All of these types of concepts can be used in iterations

Generator functions use the yield keyword to render values once at a time

Generator expressions are a concise way to create generators, and they have the following syntax:

(expression for x in iterable)Because of the lazy evaluation nature, generators are very memory-efficient, making them particularly useful when we deal with data of a very large size

References:

Made with ❤️ by Google Developer Students Clubs - Industrial University of Ho Chi Minh City team